It may not be the most exciting thing in the world but, when it comes to website optimisation, your robots.txt file is important. Yet, for many website owners, this is not something they ever look at.

It really is something every webmaster should know about, though. Even if you leave it to your trusted SEO agency, you should understand just how vital this file is. Here are 5 things you should know about your robots.txt file.

Disallow Parts Of The Site

Disallowing pages is one of the fundamental purposes of a robots.txt file. In short, a Disallow rule asks search engine crawlers, such as Google’s, not to crawl a given page or folder, which usually keeps it out of the index.

Of course, this is just a suggestion and Google may very well still index any given page. This is especially true if external links point towards it. The page can then get indexed regardless, leading to some interesting situations wherein Google doesn’t read the page for content (such as meta descriptions) because of the robots.txt file, but indexes it anyway because of the external links. In these situations, you can either re-allow the page or disavow the links causing it to appear.

Similarly, you can’t use this system to hide content from Google. Got repetitive content on your page? Google has some very sophisticated ways of determining duplicate content. Likewise, don’t disallow pages that are being redirected, as this will have a negative impact on the pages they are redirecting to.

Noindex

Another way to try to remove pages is to use the noindex directive, which tells Google not to index a given page. Again, there is the same issue of pages being linked to externally, bringing them to Google’s attention nonetheless.

However, this is still useful. Most websites have pages that they simply don’t want or need to rank in search results. Technical pages, such as those commonly found in the footer of many websites, are a prime example.
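If you want to check whether a page carries a noindex instruction, a short script can help. The sketch below assumes the directive is set through a robots meta tag in the page’s HTML, which is one common way of applying it (the approach your own site uses may differ). The names RobotsMetaParser and has_noindex are made up for this illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


if __name__ == "__main__":
    # Hypothetical page source, used only for illustration.
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(page))
```

This only inspects the HTML you pass in. It does not fetch pages or look at HTTP headers, so treat it as a quick sanity check rather than a full audit.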

Fine Tune Robot Access

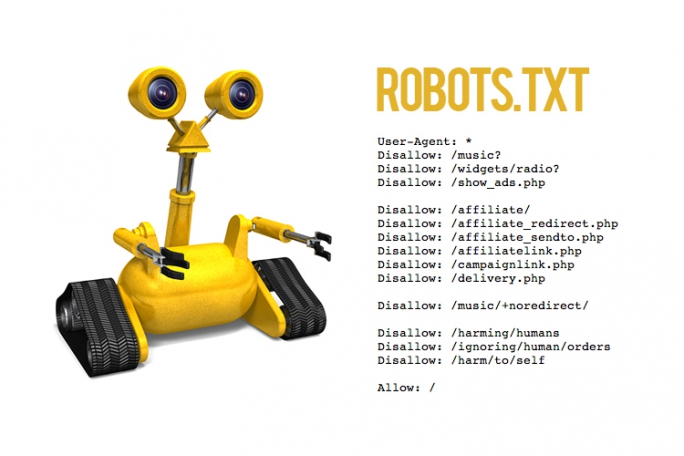

Search engines don’t all use the same robot. Any website that has an indexing feature often uses a custom robot to do so. As such, the robots.txt file states what robots can and cannot read, and it can also do this for specific robots.

In the file, these are referred to as user agents. For example, the following:

User-agent: *

Disallow: /images/

means all robots are disallowed from the images folder. However, replacing * with a specific bot (such as User-agent: Googlebot) will let you give bot-specific instructions.
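To see how bot-specific rules play out, you can test them with the robots.txt parser in Python’s standard library. This is only a sketch: the /drafts/ rule, the ExampleBot name, the example.com URLs and the can_crawl helper are all assumptions made for illustration, and Python’s parser is just one interpretation of the rules, so real crawlers may behave differently in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot gets its own group, every other bot
# falls back to the "*" group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /images/
"""


def can_crawl(robots_txt, user_agent, url):
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    image_url = "https://example.com/images/logo.png"
    # ExampleBot matches only the "*" group, so /images/ is off limits.
    print("ExampleBot:", can_crawl(ROBOTS_TXT, "ExampleBot", image_url))
    # Googlebot matches its own group, which says nothing about /images/.
    print("Googlebot:", can_crawl(ROBOTS_TXT, "Googlebot", image_url))
```

Note that a bot with its own group follows that group instead of the * rules, so any restriction you want to keep for that bot has to be repeated in its group.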

You Can Have Lots Of Fun With It!

Few people look at robots.txt files, but those who do tend to be very creative people. Why not treat your file – which is easily accessible at domain.com/robots.txt – like a 404 page? Be creative and have fun with it!

Some sites use it to recruit technical experts, while others use it to show a company logo (through ASCII and other text-based methods). You can even just use it to show a little humour.

As you can see, the robots.txt file, while simple, can do quite a lot. Because of this, it should never be overlooked!